Dreamer: Bio-Inspired Reinforcement Learning Design

Dreamer explores bio-inspired artificial intelligence, focusing on the design of learning systems that mirror natural learning patterns. The idea took shape during a cross-country drive from Boulder to Charlottesville, which prompted a connection between human sleep patterns and possible innovations in reinforcement learning architecture.

Research Concept

Current reinforcement learning systems often require task-specific training, potentially limiting their ability to develop generalizable knowledge. Human learning, by contrast, involves both active experience gathering and unconscious consolidation during sleep. This observation sparked a key question: Could artificial systems benefit from a similar cyclical learning pattern?

The concept draws a parallel between gradient-based training of neural networks (backpropagation) and biological memory consolidation during sleep. Traditional networks learn through continuous training cycles, whereas biological systems alternate between a phase of gathering experience and a phase of processing memories. This project proposes investigating whether a similar alternation could improve learning outcomes in artificial systems.

Proposed Architecture

The system design incorporates two distinct phases of operation:

Experience Collection (“Daytime” Phase)

The active phase would allow the agent to:

- Explore its environment without specific task objectives

- Store experiences in a short-term memory buffer

- Focus on gathering diverse interaction data

- Maintain a running record of state-action pairs
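As a rough illustration of the daytime phase, the sketch below has an agent wander through a toy environment under a uniform random policy and record every transition in a buffer. It uses only the Python standard library. `ChainWorld`, `Transition`, and `collect_day` are hypothetical names invented for this example, not part of the project's code, and the real environment is expected to be far richer.

```python
import random
from collections import deque
from typing import Deque, NamedTuple


class Transition(NamedTuple):
    state: int
    action: int
    next_state: int


class ChainWorld:
    """Hypothetical toy environment: integer positions 0..size-1 on a line."""

    def __init__(self, size: int = 5) -> None:
        self.size = size
        self.state = 0

    def step(self, action: int) -> int:
        # Action 1 moves right, any other action moves left; walls clamp the position.
        delta = 1 if action == 1 else -1
        self.state = min(self.size - 1, max(0, self.state + delta))
        return self.state


def collect_day(env: ChainWorld, steps: int, rng: random.Random) -> Deque[Transition]:
    """Explore with a uniform random policy and record every transition."""
    buffer: Deque[Transition] = deque()
    for _ in range(steps):
        state = env.state
        action = rng.choice([0, 1])  # no reward signal and no task objective
        next_state = env.step(action)
        buffer.append(Transition(state, action, next_state))
    return buffer


day_buffer = collect_day(ChainWorld(size=5), steps=100, rng=random.Random(0))
```

After the call, `day_buffer` holds 100 transitions that a later consolidation step could train on. No reward is stored because exploration here has no task objective.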

Knowledge Consolidation (“Nighttime” Phase)

During the consolidation phase, the system would:

- Process stored experiences through multiple training iterations

- Reorganize and consolidate learned patterns

- Update its internal model of the environment

- Clear the short-term memory buffer for the next cycle
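The consolidation steps above can be sketched in a few lines. This example assumes a deliberately simple stand-in for the planned neural network: a lookup table that predicts the next state for each state-action pair and is nudged toward observed outcomes over several passes. The function name `consolidate`, the tuple layout, and the values of `epochs` and `lr` are all illustrative assumptions.

```python
import random
from typing import Dict, List, Tuple

Transition = Tuple[int, int, int]  # (state, action, next_state)


def consolidate(
    buffer: List[Transition],
    model: Dict[Tuple[int, int], float],
    epochs: int = 20,
    lr: float = 0.2,
    seed: int = 0,
) -> None:
    """Replay the buffer several times, update the model, then clear the buffer."""
    rng = random.Random(seed)
    for _ in range(epochs):
        batch = list(buffer)
        rng.shuffle(batch)  # replay stored experiences in a fresh order each pass
        for state, action, next_state in batch:
            key = (state, action)
            # Unseen pairs start from the guess "nothing changes".
            prediction = model.get(key, float(state))
            # Move the prediction a fraction lr of the way toward the observation.
            model[key] = prediction + lr * (next_state - prediction)
    buffer.clear()  # empty short-term memory for the next day phase


buffer = [(0, 1, 1), (1, 1, 2), (2, 0, 1), (1, 0, 0), (0, 1, 1)]
model: Dict[Tuple[int, int], float] = {}
consolidate(buffer, model)
```

After the call, the prediction for each observed pair has moved close to the observed next state and `buffer` is empty. A PyTorch version would replace the table update with an optimizer step on a prediction loss, but the loop structure would stay the same.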

Technical Framework

The implementation plan leverages several key technologies:

Environment Design

- Custom environment implementing the day-night cycle

- Flexible state and action spaces supporting various interaction types

- Configurable cycle duration and transition parameters

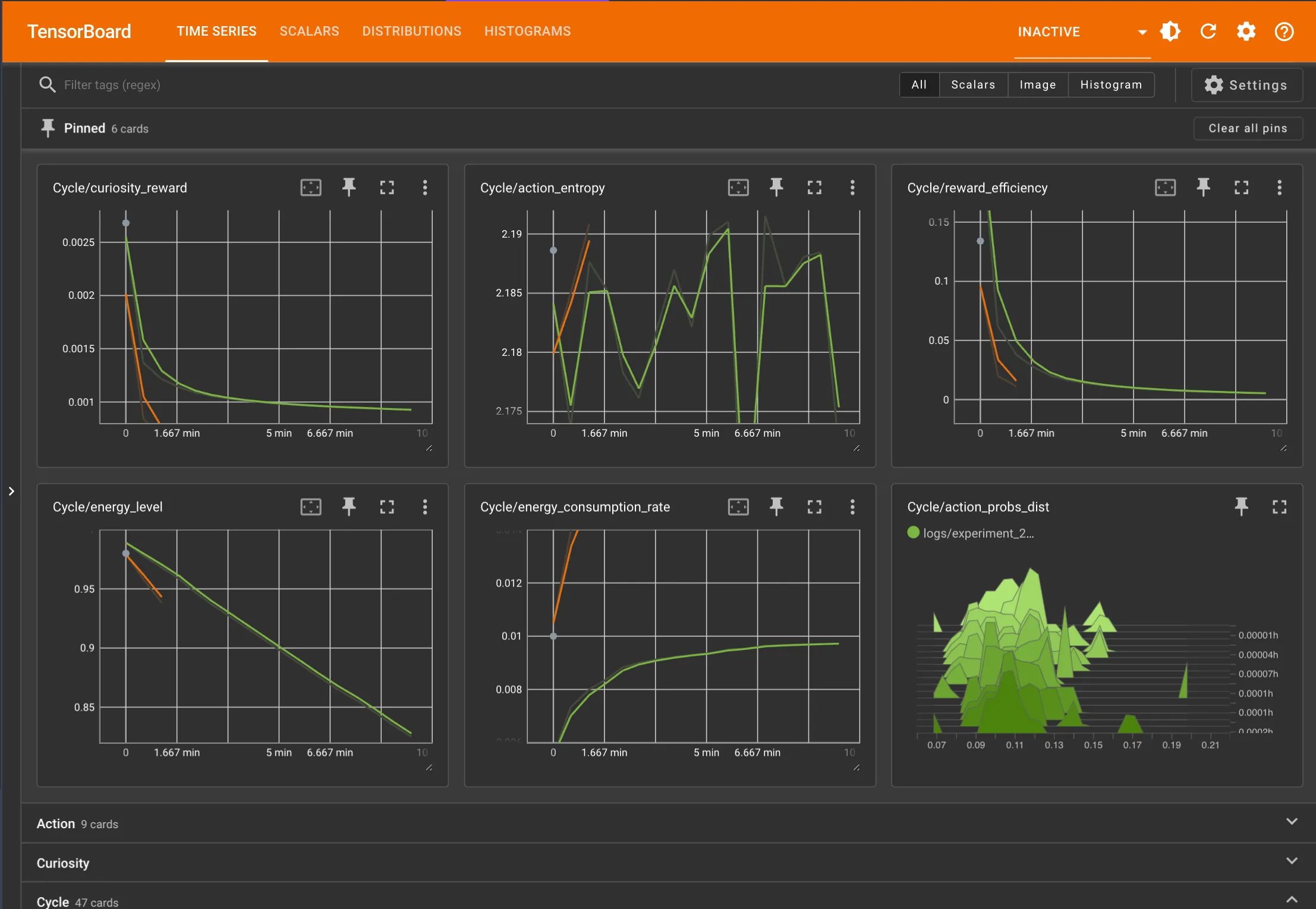

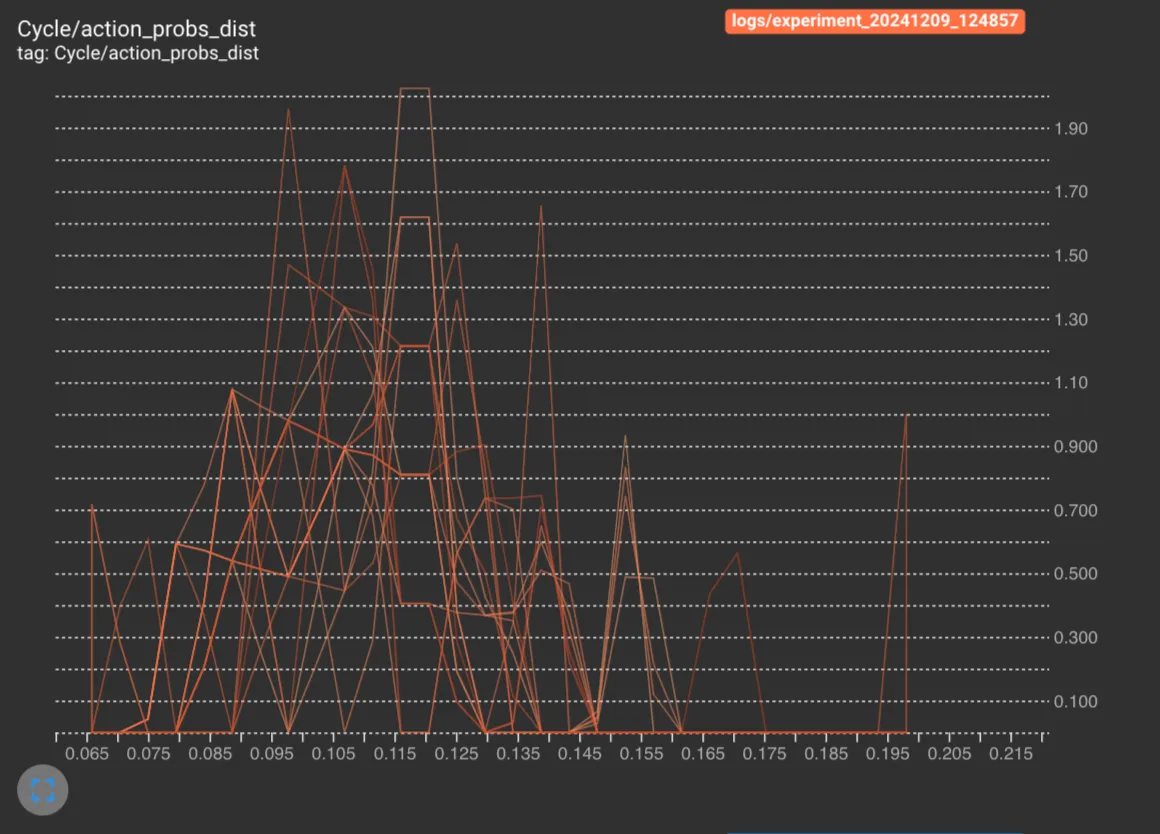

- Integrated monitoring and visualization tools (tensorboard)
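One possible way to track the configurable day-night cycle is a small clock object that the environment consults on every step. The sketch below is an assumption about how this might look. `DayNightClock`, `day_steps`, and `night_steps` are hypothetical names, and the default durations are arbitrary.

```python
from dataclasses import dataclass


@dataclass
class DayNightClock:
    day_steps: int = 1000   # length of the experience-collection phase
    night_steps: int = 200  # length of the consolidation phase
    t: int = 0              # global step counter

    @property
    def phase(self) -> str:
        # Position within the current cycle decides the phase.
        position = self.t % (self.day_steps + self.night_steps)
        return "day" if position < self.day_steps else "night"

    def tick(self) -> bool:
        """Advance one step and report whether the phase just changed."""
        before = self.phase
        self.t += 1
        return self.phase != before


clock = DayNightClock(day_steps=3, night_steps=2)
phases = []
for _ in range(7):
    phases.append(clock.phase)
    clock.tick()
```

With three day steps and two night steps, `phases` cycles through three `"day"` entries, two `"night"` entries, and then starts the next day. The boolean returned by `tick` gives the training loop a hook for transition logic, such as starting consolidation or clearing the buffer.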

Learning Architecture

- PyTorch-based neural network implementation

- Custom memory management system for experience storage

- Dedicated training loops for day and night phases

- Comprehensive logging and analysis capabilities

Design Considerations

The development process has highlighted several key areas requiring careful consideration:

Memory Management

The system needs efficient mechanisms for storing and processing experiences while maintaining reasonable memory constraints. Current design work focuses on implementing a rolling buffer system with priority sampling.
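A rolling buffer with priority sampling could look something like the following minimal sketch. It assumes a fixed capacity enforced by `collections.deque` and weighted sampling with replacement through `random.choices`. The class name `RollingPriorityBuffer` and the way priorities are assigned are hypothetical, since the design does not yet specify how priorities would be computed.

```python
import random
from collections import deque
from typing import Any, Deque, List, Tuple


class RollingPriorityBuffer:
    def __init__(self, capacity: int, seed: int = 0) -> None:
        # A deque with maxlen drops the oldest entry once capacity is reached.
        self._items: Deque[Tuple[Any, float]] = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, experience: Any, priority: float = 1.0) -> None:
        if priority <= 0:
            raise ValueError("priority must be positive")
        self._items.append((experience, priority))

    def sample(self, k: int) -> List[Any]:
        """Draw k experiences with replacement, proportionally to priority."""
        if not self._items:
            raise ValueError("cannot sample from an empty buffer")
        experiences = [e for e, _ in self._items]
        weights = [p for _, p in self._items]
        return self._rng.choices(experiences, weights=weights, k=k)

    def __len__(self) -> int:
        return len(self._items)


replay = RollingPriorityBuffer(capacity=3)
for name, priority in [("a", 1.0), ("b", 1.0), ("c", 1.0), ("d", 100.0)]:
    replay.add(name, priority)
draws = replay.sample(200)
```

Here the oldest entry `"a"` is evicted when `"d"` arrives, and `"d"` dominates the draws because of its much larger priority. Rebuilding the weight list on every call costs time linear in the buffer size. A sum tree is a common alternative if sampling becomes a bottleneck.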

Training Stability

Balancing exploration during the day phase with effective consolidation during the night phase presents complex design challenges. The implementation will need to carefully manage learning rates and hyperparameters based on cycle phases.
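One way to make hyperparameters depend on the cycle phase is a per-phase configuration table plus a schedule for the night phase. The sketch below is purely illustrative. `PhaseConfig`, `PHASE_CONFIGS`, `night_learning_rate`, and every numeric value are assumptions chosen for the example, not settings from the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseConfig:
    learning_rate: float     # step size for weight updates
    exploration_rate: float  # probability of taking a random action
    updates_per_step: int    # gradient updates per environment step


# Assumed values: explore freely by day, train without acting randomly by night.
PHASE_CONFIGS = {
    "day": PhaseConfig(learning_rate=0.0, exploration_rate=1.0, updates_per_step=0),
    "night": PhaseConfig(learning_rate=1e-3, exploration_rate=0.0, updates_per_step=4),
}


def night_learning_rate(base_lr: float, step: int, total_steps: int, floor: float = 0.1) -> float:
    """Decay linearly from base_lr to floor * base_lr across one night phase."""
    fraction = min(max(step / total_steps, 0.0), 1.0)
    return base_lr * (1.0 - (1.0 - floor) * fraction)


start_lr = night_learning_rate(PHASE_CONFIGS["night"].learning_rate, step=0, total_steps=100)
end_lr = night_learning_rate(PHASE_CONFIGS["night"].learning_rate, step=100, total_steps=100)
```

Decaying the learning rate within each night is one option for stability, since late updates then disturb what earlier updates consolidated less. Whether it helps in practice is an open question for the planned experiments.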

Next Steps

The project’s immediate development priorities include:

Implementation

- Building the core environment and agent architecture

- Developing the experience collection and storage system

- Implementing the training cycle mechanisms

- Creating monitoring and analysis tools

Evaluation

- Designing experiments to test knowledge transfer capabilities

- Developing metrics for assessing generalization

- Planning comparative studies with traditional training approaches

This research design aims to contribute to our understanding of bio-inspired learning systems. By exploring the potential benefits of sleep-like consolidation phases in artificial learning, the project may offer insights into more efficient and adaptable AI training methods.